Kubernetes 1.15 Is Here

Kubernetes 1.15 is (as of summer 2019) the latest release of the open source Kubernetes platform, used to automate the management and orchestration of Linux container clusters. The platform, originally designed and developed by Google software engineers, has become the go-to tool for Agile and DevOps teams, including our own, to host cloud-native applications for which rapid and cost-efficient scalability is a requirement.

With speed, agility, scalability, reliability and security more often than not fundamental requirements for cloud-native apps, Kubernetes has become one of the most widely used DevOps technologies. Increasingly complex apps composed of multiple microservices also require increasingly efficient management if high availability is to be maintained without cloud resource costs becoming incompatible with the business model, and once again Kubernetes rides to the rescue.

In this post we'll give an overview of what is new in the recent Kubernetes 1.15 release and how the updates improve on previous iterations of the platform, look at specific use cases, and finish with a technical step-by-step guide to setting up Kubernetes 1.15 to run in high-availability mode.

What’s New In K8s 1.15?

With 10 new Alpha features, 13 of the Kubernetes 1.14 Alphas graduating to Beta and 2 features graduating to Stable in Kubernetes 1.15, covering everything that is new in this release would be a serious undertaking. But some highlights are:

The Ability to Create Dynamic HA Clusters With Kubeadm

This feature has moved to Beta from Alpha in K8s 1.15.

The kubeadm tool allows dynamic HA clusters to be created using the familiar kubeadm init and kubeadm join commands. The difference is that kubeadm init is run with the --upload-certs flag, as below, and the --control-plane flag has to be passed to kubeadm join on each additional master:

kubeadm init --config=kubeadm-config.yaml --upload-certs

Extension of Permitted PVC DataSources – Volume Cloning

- A net-new Alpha feature in Kubernetes 1.15. Users can now ask for a volume to be cloned, thanks to added support for specifying an existing PVC as the dataSource of a new PVC. Cloning is only available for CSI drivers that support it.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-clone
  namespace: demo-namespace
spec:
  storageClassName: csi-storageclass
  dataSource:
    name: src-pvc
    kind: PersistentVolumeClaim
    apiGroup: ""
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

Admission Webhooks

- This Beta feature received further updates in release 1.15. Admission webhooks extend Kubernetes by placing a hook on object creation, modification or deletion, through which the object can be mutated or validated. Admission webhooks have also been extended to single objects.

Custom Resources Defaulting and Pruning

- This feature is net-new Alpha in K8s 1.15. Most native Kubernetes API types implement defaulting, which plays a key role in maintaining API compatibility when new fields are added, but CustomResources don't support it natively. The feature adds support for specifying default values for fields via the OpenAPI v3 validation schema in the CRD manifest. CustomResources also store arbitrary JSON data, without the usual Kubernetes API behaviour of dropping unknown fields. This sets CRDs apart from native types, and the lack of clarity about what is actually stored in etcd adds security and general data-consistency risks. Pruning addresses this by automatically dropping any fields that are not specified in the CRD's OpenAPI validation schema.

Webhook Conversion for Custom Resource Definitions

- A previously Alpha feature that has graduated to Beta in 1.15, adding version-conversion support for Kubernetes resources defined through CRDs. Before committing to the development of a CRD plus controller, CRD users need assurance that they will be able to evolve their API. While a CRD can serve multiple versions, there was no conversion between those versions. This feature adds a conversion mechanism for CRDs that calls an external webhook. The enhancement proposal details the API changes, use cases and upgrade/downgrade scenarios.

CRD OpenAPI Schema Publishing

- Another Alpha feature from 1.14 promoted to Beta for Kubernetes 1.15. Publishing CRD OpenAPI schemas enables client-side validation, schema explanation (kubectl explain) and client generation for CRs. This bridges the gap between native Kubernetes APIs, which already publish OpenAPI documentation, and CRs. For every CRD served, the published OpenAPI document includes Paths and Definitions describing its API. For CRDs with a schema, the CR object Definition includes the CRD schema plus the native Kubernetes ObjectMeta and TypeMeta properties. For CRDs without a schema, the CR object definition is completed to the extent possible while remaining compatible with the OpenAPI spec and supported Kubernetes components.

Add Watch Bookmarks Support

- Another completely new feature with net-new Alpha status in K8s 1.15, which makes restarting watches cheaper in terms of kube-apiserver performance. Scalability testing has shown that re-initiating watches can add significant load to the kube-apiserver when a watcher only observes a small fraction of changes, e.g. because of a field or label selector. In more extreme cases, re-establishing such a watcher fails with a “resource version too old” error because its last-seen resource version has dropped out of the history window. A Bookmark is a new kind of watch event that tells a particular watcher that all objects up to a given resource version have been processed. Resuming from that version after a restart skips all unnecessary events, which reduces the load on the API server and eliminates most “resource version too old” errors.
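To make the bookmark mechanism concrete, here is a minimal shell sketch of the client side: remember the newest resourceVersion seen on the watch stream, from ordinary events and bookmarks alike, so that a restarted watch can resume from it. It assumes one compact JSON event per line and uses sed instead of a real JSON parser; the helper name last_resource_version is hypothetical.

```shell
# Sketch (assumption: one compact JSON watch event per line, parsed with sed
# rather than a real JSON parser). last_resource_version is a hypothetical helper.
last_resource_version() {
  # Print the resourceVersion of each event (ADDED, MODIFIED, DELETED or
  # BOOKMARK). The greedy leading .* makes sed capture the last
  # "resourceVersion" on the line; tail keeps only the newest event's value.
  sed -n 's/.*"resourceVersion":"\([^"]*\)".*/\1/p' | tail -n 1
}

# Example stream: one normal event, then a bookmark that only carries a version
printf '%s\n' \
  '{"type":"ADDED","object":{"kind":"Pod","metadata":{"name":"web","resourceVersion":"100"}}}' \
  '{"type":"BOOKMARK","object":{"kind":"Pod","metadata":{"resourceVersion":"250"}}}' |
  last_resource_version
```

The example prints 250. A restarted watch would pass that value as the resourceVersion query parameter, together with allowWatchBookmarks=true to ask for bookmark events; in 1.15 the server only sends them when the alpha WatchBookmark feature gate is enabled.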

Other New Kubernetes 1.15 Features

Above are a few of the 1.15 release's highlights. The two features that became fully Stable in this release are Go module support for k8s.io/kubernetes, and kubectl get and describe working well with extensions.

A non-preempting option has also been added to PriorityClasses. And there are a host of new Alpha and Beta updates that can be expected to graduate to Stable Kubernetes elements in future releases.

A full description of the new Kubernetes 1.15 features at every stage, from Alpha and Beta to Stable, and of their applications has been published by the Cloud Native Computing Foundation, in which 1.15 Enhancements Lead Kenny Coleman goes into detail on all of the features briefly outlined above.

Is Kubernetes 1.15 An Improvement On 1.14?

The strength of the Kubernetes community, and the robust processes set up for proposing new features through the community's Special Interest Groups (SIGs) and then introducing them from Alpha to Beta to Stable, all but guarantee that each new iteration of the platform improves on the previous one. Kubernetes 1.15 is no exception.

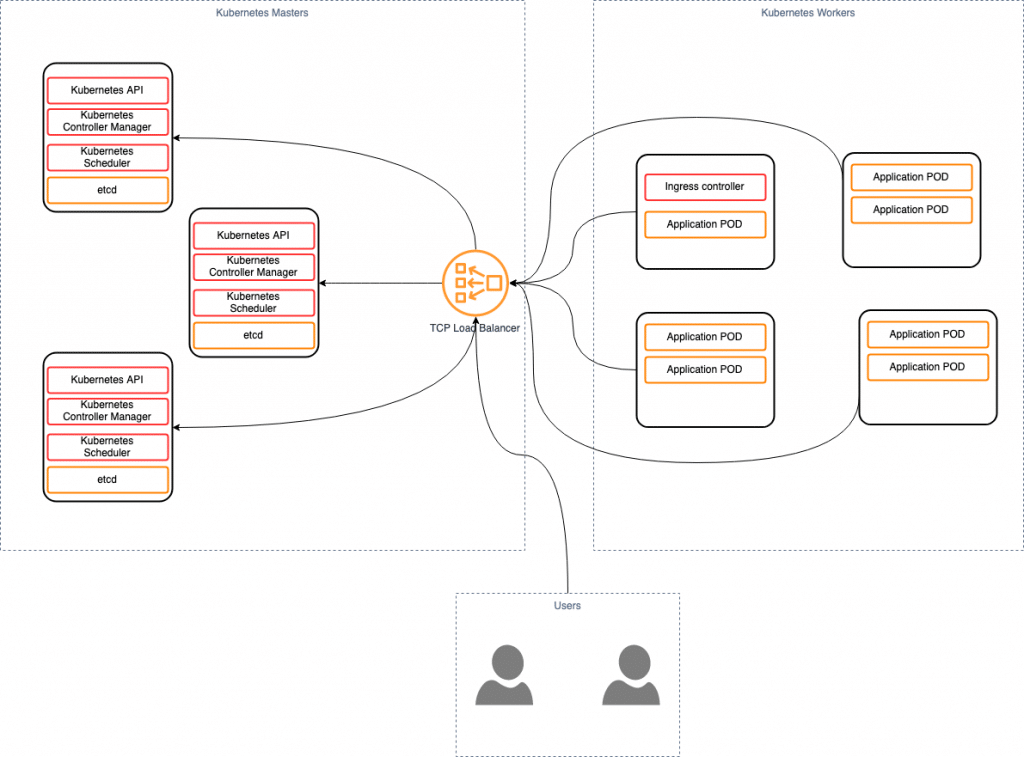

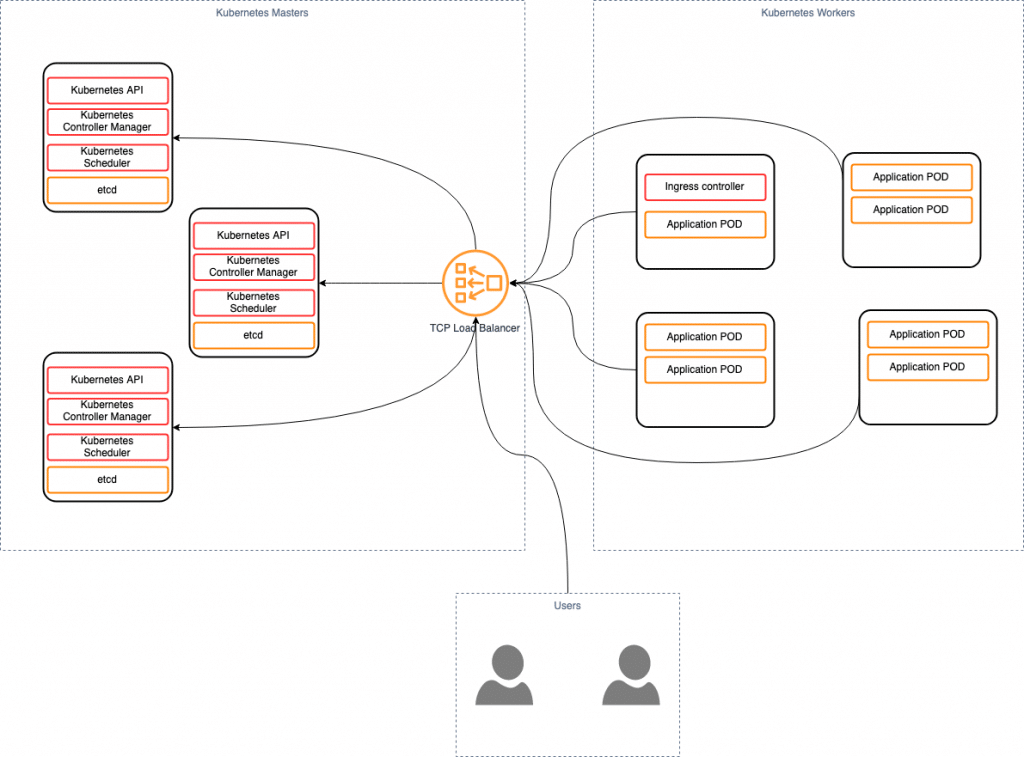

Kubernetes High-Availability Mode

To build cloud-native apps with no single point of failure, Kubernetes, along with its supporting components, must be set up in high-availability mode. This involves using a multi-master cluster rather than a single-master cluster that can fail: a multi-master cluster has multiple master nodes, all with equal access to the same worker nodes.

If a key component such as the API server or controller manager lies on a single master node, its failure prevents the creation of any more services, pods and so on. A high-availability Kubernetes environment replicates key components across multiple (usually 3) masters. Like the engines on a jet, even if one master fails, the remaining ones keep the cluster running.
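Why three masters? With the stacked topology that kubeadm uses by default (and that the guide below relies on), every master also runs an etcd member, and etcd only accepts writes while a majority (quorum) of its members is available. The short sketch below, using hypothetical helper names, shows the arithmetic.

```shell
# Sketch: quorum arithmetic for a stacked etcd control plane, assuming one etcd
# member per master. Helper names are hypothetical.
etcd_quorum() {
  # A majority of n members: floor(n / 2) + 1
  echo $(( $1 / 2 + 1 ))
}

tolerated_failures() {
  # Members that can be lost while a majority is still available
  echo $(( $1 - $(etcd_quorum "$1") ))
}

for n in 1 2 3 4 5; do
  echo "masters=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

For 3 masters this prints quorum=2 tolerated_failures=1. A fourth master raises the quorum to 3 and still tolerates only one failure, which is why odd sizes such as 3 or 5 are the usual choice.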

Technical Benefits Of Kubernetes 1.15 High Availability Mode

- Fault tolerance

- Rapid app deployment

- Observation

- Horizontal Scaling

- AutoDiscovery

- Flexible utility – Kubernetes can be used across monolith and microservices applications and in public, private or hybrid cloud environments

Technical Risks Of Kubernetes 1.15 High Availability Mode

The only real downside to Kubernetes high-availability mode (when a requirement for high availability and fault tolerance justifies the overhead) is that the recovery process is complex and requires access to experienced engineers.

Kubernetes 1.15 Overheads

Employing Kubernetes does involve a higher overhead than alternatives such as virtual machines. However, the automation, deployment and cloud resource efficiencies it offers should more than compensate for this fixed cost when it is used in the appropriate circumstances. Add to that the potential cost to a business of using a less available, less fault-tolerant technology, and the business case is usually self-explanatory.

How to Run Kubernetes 1.15 in High Availability Mode – A Technical Implementation Step-by-Step Guide

Architecture scheme:

We will have 3 servers for the master nodes, 2 servers for the worker nodes and 1 server for the load balancer:

| Name | IP |
|---|---|
| master-1 | 10.0.0.1 |
| master-2 | 10.0.0.2 |
| master-3 | 10.0.0.3 |
| load-balancer | 10.0.0.4 |
| worker-1 | 10.0.0.5 |
| worker-2 | 10.0.0.6 |

NGINX load balancer

We will use NGINX as a TCP load balancer in front of the API servers on the three masters.

Add nginx repository:

vi /etc/yum.repos.d/nginx.repo

[nginx]
name=nginx repo
baseurl=http://nginx.org/packages/centos/$releasever/$basearch/
gpgcheck=0
enabled=1

Installing nginx:

yum install -y nginx

Edit nginx.conf:

events { }
stream {
    upstream stream_backend {
        least_conn;
        server 10.0.0.1:6443;
        server 10.0.0.2:6443;
        server 10.0.0.3:6443;
    }
    server {
        listen 6443;
        proxy_pass stream_backend;
    }
}

Restart nginx server:

systemctl restart nginx
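If the set of masters changes, the upstream list has to be kept in sync by hand. A small shell sketch (render_nginx_lb is a hypothetical helper) that prints an equivalent stream configuration for any list of master IPs:

```shell
# Sketch: print the NGINX stream configuration for any list of masters.
# render_nginx_lb is a hypothetical helper; it writes to stdout only.
render_nginx_lb() {
  # $1: port the load balancer and the API servers listen on; rest: master IPs
  local port=$1 ip
  shift
  printf 'events { }\n\nstream {\n    upstream stream_backend {\n        least_conn;\n'
  for ip in "$@"; do
    printf '        server %s:%s;\n' "$ip" "$port"
  done
  printf '    }\n\n    server {\n        listen %s;\n        proxy_pass stream_backend;\n    }\n}\n' "$port"
}
```

For example, render_nginx_lb 6443 10.0.0.1 10.0.0.2 10.0.0.3 > /etc/nginx/nginx.conf writes the same upstreams as the configuration above, and nginx -t checks the file before nginx is restarted.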

Kubernetes Servers Prep

Prepare all the Kubernetes servers (masters and workers), which run CentOS 7.

Install the prerequisites first (yum-utils provides yum-config-manager, which is used in the next step):

yum install -y yum-utils device-mapper-persistent-data lvm2

Add docker repository:

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Install docker:

yum install -y docker-ce-18.09.8 docker-ce-cli-18.09.8 containerd.io

Start the docker service and enable autostart:

systemctl enable docker
systemctl start docker

Create configuration file for docker:

vi /etc/docker/daemon.json

{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ],
  "experimental": true
}

Restart docker to apply the new configuration:

systemctl restart docker

Add the kubernetes repository:

vi /etc/yum.repos.d/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

Install kubernetes packages:

yum install -y kubelet kubeadm kubectl

Add kubelet to autostart and start it:

systemctl enable kubelet
systemctl start kubelet

Configure sysctl:

cat <<EOF > /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

Apply sysctl:

sysctl --system
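A quick way to double-check the drop-in file is sketched below (check_k8s_sysctl is a hypothetical helper that only reads the file). Keep in mind that the two net.bridge keys only exist once the br_netfilter kernel module is loaded (modprobe br_netfilter); otherwise sysctl --system cannot apply them.

```shell
# Sketch: check that a sysctl drop-in sets the three keys used above to 1.
# check_k8s_sysctl is a hypothetical helper; it only reads the given file.
check_k8s_sysctl() {
  local file=$1 key status=0
  for key in net.ipv4.ip_forward net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    # Accept "key = 1" or "key=1"; the dots in key act as regex wildcards,
    # which is harmless for these names
    if ! grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*\$" "$file"; then
      echo "not set to 1: $key" >&2
      status=1
    fi
  done
  return "$status"
}
```

Run it as check_k8s_sysctl /etc/sysctl.d/k8s.conf; sysctl -n net.bridge.bridge-nf-call-iptables shows the value actually in effect on the node.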

Master nodes:

Create the kubeadm directory:

mkdir /etc/kubernetes/kubeadm

In this directory, create a configuration file for initializing the cluster:

vim /etc/kubernetes/kubeadm/kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: stable
controlPlaneEndpoint: "10.0.0.4:6443"
networking:
  podSubnet: 192.168.0.0/16

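| Parameter | Description |
|---|---|
| kubernetesVersion | Kubernetes version to deploy; the label stable resolves to the latest stable release |
| controlPlaneEndpoint | API server endpoint: the IP and port of the load balancer |
| podSubnet | Network (CIDR) for pods |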
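Since only the load-balancer endpoint and the pod subnet are specific to this setup, the file can also be generated. A minimal sketch, where write_kubeadm_config is a hypothetical helper and the optional fourth argument (an assumption, not part of the original file) pins a version such as v1.15.12 instead of stable:

```shell
# Sketch: generate the kubeadm ClusterConfiguration used above.
# write_kubeadm_config is a hypothetical helper; the optional 4th argument
# (an assumption, not in the original file) pins kubernetesVersion.
write_kubeadm_config() {
  # $1: output file, $2: load balancer endpoint, $3: pod subnet, $4: version
  cat > "$1" <<EOF
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: ${4:-stable}
controlPlaneEndpoint: "$2"
networking:
  podSubnet: $3
EOF
}
```

For this cluster: write_kubeadm_config /etc/kubernetes/kubeadm/kubeadm-config.yaml 10.0.0.4:6443 192.168.0.0/16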

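Initialize the cluster with the config file and the --upload-certs flag. Since version 1.14, kubeadm can upload the control-plane certificates to the cluster, where they are stored, encrypted with a certificate key, in the kubeadm-certs Secret (and therefore in etcd), so that the other masters can fetch them when they join.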

kubeadm init --config=/etc/kubernetes/kubeadm/kubeadm-config.yaml --upload-certs

Copy the admin kubeconfig into a directory in your home folder so that kubectl can connect to the Kubernetes API:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check our API:

kubectl get nodes

Output:

NAME       STATUS     ROLES    AGE     VERSION
master-1   NotReady   master   1m23s   v1.15.12

Next, copy the join command for masters (the one with --control-plane and --certificate-key) from the kubeadm init output and run it on master-2 and master-3:

kubeadm join 10.0.0.4:6443 --token n7xoow.oqcrlo5a3h2rawxq \
    --discovery-token-ca-cert-hash sha256:c0ad04b8bd64e793b615cdac5c51c2552e9be69146d3191f196c78881e9e85ba \
    --control-plane --certificate-key 1a187ef8f80b94a03265c2cd65fa9cac10714f9c3507438b7785e31b120862e7

After successful completion, we will see new masters:

kubectl get nodes

Output:

NAME       STATUS     ROLES    AGE     VERSION
master-1   NotReady   master   5m23s   v1.15.12
master-2   NotReady   master   4m51s   v1.15.12
master-3   NotReady   master   1m22s   v1.15.12

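The uploaded certificates are deleted after 2 hours, and the join token created by kubeadm init expires after 24 hours. kubeadm also creates a second token that only manages the TTL of the kubeadm-certs Secret, so it expires together with the certificates; the TTL column in the token list shows the time remaining.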

List the tokens:

kubeadm token list

Output:


| TOKEN | TTL | EXPIRES | USAGES | DESCRIPTION | EXTRA GROUPS |
|---|---|---|---|---|---|
| n7xoow.oqcrlo5a3h2rawxq | 23h | 2019-08-07T21:47:34+02:00 | authentication,signing | <none> | system:bootstrappers:kubeadm:default-node-token |
| q4rc7k.7ratynao0w2caguz | 1h | 2019-08-06T23:47:33+02:00 | <none> | Proxy for managing TTL for the kubeadm-certs secret | <none> |

Delete tokens:

kubeadm token delete n7xoow.oqcrlo5a3h2rawxq
kubeadm token delete q4rc7k.7ratynao0w2caguz
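Rather than copying token IDs by hand, they can be taken from the first column of the kubeadm token list output. A sketch, assuming the first line is the header (token_ids is a hypothetical helper):

```shell
# Sketch: print the token IDs (first column) from `kubeadm token list`
# output read on stdin, skipping the header line. token_ids is hypothetical.
token_ids() {
  awk 'NR > 1 && NF > 0 { print $1 }'
}
```

For example: kubeadm token list | token_ids | while read -r t; do kubeadm token delete "$t"; done. Note that this deletes every bootstrap token, including any that nodes still waiting to join may need.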

Create a new token with a 10-minute TTL and print its join command:

kubeadm token create --ttl 10m --print-join-command

Output:

kubeadm join 10.0.0.4:6443 --token am19v8.zov3nu6od7js4t2x --discovery-token-ca-cert-hash sha256:c0ad04b8bd64e793b615cdac5c51c2552e9be69146d3191f196c78881e9e85ba
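Before pasting a token or CA hash into a join command, their format can be sanity-checked: bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16} (a public token ID and a secret), and the discovery hash is sha256: followed by 64 hex digits. A sketch with hypothetical helper names:

```shell
# Sketch: format checks for the values pasted into kubeadm join.
# Helper names are hypothetical.
is_bootstrap_token() {
  # <6-char token ID>.<16-char secret>, lowercase letters and digits only
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

is_ca_cert_hash() {
  # "sha256:" followed by the 64 hex digits of the CA public key hash
  printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

Both helpers only check the shape of a value; whether the token is still valid is decided by the API server when the node joins.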

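Because the uploaded certificates are deleted after 2 hours, re-upload them before adding more masters later; this also generates a new certificate key: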

kubeadm init phase upload-certs --upload-certs

Output:

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key: b904b8248991eebe9c783b55a353fb95825e4e2823cb741522f2f4dcdcce5527

New master nodes can then be added with the command:

kubeadm join 10.0.0.4:6443 \
    --token am19v8.zov3nu6od7js4t2x \
    --discovery-token-ca-cert-hash sha256:c0ad04b8bd64e793b615cdac5c51c2552e9be69146d3191f196c78881e9e85ba \
    --control-plane \
    --certificate-key b904b8248991eebe9c783b55a353fb95825e4e2823cb741522f2f4dcdcce5527
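The master and worker join commands only differ in the --control-plane and --certificate-key flags. A sketch of a helper (build_join_cmd is hypothetical) that assembles either form from the printed token, CA hash and optional certificate key; it only prints the command and does not run it:

```shell
# Sketch: assemble (but do not run) a kubeadm join command.
# build_join_cmd is a hypothetical helper.
build_join_cmd() {
  # $1: load balancer endpoint, $2: token, $3: CA cert hash,
  # $4: optional certificate key (only needed for additional masters)
  local cmd="kubeadm join $1 --token $2 --discovery-token-ca-cert-hash $3"
  if [ -n "${4:-}" ]; then
    cmd="$cmd --control-plane --certificate-key $4"
  fi
  printf '%s\n' "$cmd"
}
```

Leaving out the fourth argument yields the worker form used in the next step.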

With the master nodes finished, we can move on to the worker nodes.

Worker Nodes

Using the same principle as for the Master Nodes, run the join command on all Worker Nodes:

kubeadm join 10.0.0.4:6443 --token am19v8.zov3nu6od7js4t2x \
    --discovery-token-ca-cert-hash sha256:c0ad04b8bd64e793b615cdac5c51c2552e9be69146d3191f196c78881e9e85ba

After adding all servers, kubectl get nodes should list all of them:

kubectl get nodes

Output:

NAME       STATUS     ROLES    AGE      VERSION
master-1   NotReady   master   51m23s   v1.15.12
master-2   NotReady   master   49m51s   v1.15.12
master-3   NotReady   master   48m22s   v1.15.12
worker-1   NotReady   <none>   2m22s    v1.15.12
worker-2   NotReady   <none>   1m22s    v1.15.12

All servers have been added, but they are in NotReady status because no pod network has been installed yet. In our example we will use the Calico network plugin. Let's install it in our cluster:

curl https://docs.projectcalico.org/v3.8/manifests/calico.yaml -O
kubectl apply -f calico.yaml
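Calico assigns pod IPs from its IP pool, which in the v3.8 manifest we believe defaults to 192.168.0.0/16 (the CALICO_IPV4POOL_CIDR setting), matching podSubnet in the kubeadm config. The pod network must not overlap the network the nodes themselves use, assumed here to be 10.0.0.0/24 for the addresses in the architecture table. A small sketch of such an overlap check (ip_to_int and cidr_overlap are hypothetical helpers):

```shell
# Sketch: do two IPv4 CIDR blocks overlap? Helper names are hypothetical.
ip_to_int() {
  # Split the dotted quad on "." and combine the four octets into one integer
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

cidr_overlap() {
  # Two blocks overlap exactly when they agree on the bits of the shorter prefix
  local a_ip=${1%/*} a_len=${1#*/} b_ip=${2%/*} b_len=${2#*/} len mask a b
  len=$a_len
  if [ "$b_len" -lt "$len" ]; then len=$b_len; fi
  if [ "$len" -eq 0 ]; then
    mask=0
  else
    mask=$(( (4294967295 << (32 - len)) & 4294967295 ))
  fi
  a=$(ip_to_int "$a_ip")
  b=$(ip_to_int "$b_ip")
  [ $(( a & mask )) -eq $(( b & mask )) ]
}

# Pod subnet from the kubeadm config vs. the assumed node network
if cidr_overlap 192.168.0.0/16 10.0.0.0/24; then
  echo "pod subnet overlaps the node network"
else
  echo "no overlap"
fi
```

With the addresses used in this guide the check prints "no overlap"; if your nodes live in 192.168.x.x, choose a different podSubnet and Calico pool before running kubeadm init.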

Check:

kubectl get nodes

Output:

NAME       STATUS   ROLES    AGE      VERSION
master-1   Ready    master   51m23s   v1.15.12
master-2   Ready    master   49m51s   v1.15.12
master-3   Ready    master   48m22s   v1.15.12
worker-1   Ready    <none>   2m22s    v1.15.12
worker-2   Ready    <none>   1m22s    v1.15.12
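It can take a while for every node to switch to Ready after the network plugin is applied. A sketch of a check that could be polled, where all_nodes_ready is a hypothetical helper reading kubectl get nodes --no-headers output on stdin:

```shell
# Sketch: succeed only if every line of `kubectl get nodes --no-headers`
# output (read on stdin) has a STATUS starting with "Ready".
# all_nodes_ready is a hypothetical helper; "Ready,SchedulingDisabled" counts
# as ready, and empty input counts as not ready.
all_nodes_ready() {
  awk '
    { total++ }
    $2 !~ /^Ready/ { bad++ }
    END { exit (total == 0 || bad > 0) }
  '
}
```

For example: until kubectl get nodes --no-headers | all_nodes_ready; do sleep 10; done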

Kubernetes 1.15 In HA Mode Invaluable to Reliability Engineering

High availability is an important part of reliability engineering, which focuses on making systems reliable and avoiding any single point of failure in the complete system. At first glance its implementation might seem quite complex, but HA brings tremendous advantages to systems that require increased stability and reliability. Using a highly available cluster is one of the most important aspects of building a solid infrastructure.