In this post, we look at the pros and cons of CephFS and NFS as distributed file storage systems for a Docker cluster on bare-metal servers, and show how to set up a cluster with each.

When K&C’s DevOps engineers build a Docker cluster to virtualise the development environment on physical (bare-metal) servers, the CephFS vs NFS (Ceph File System vs Network File System) question often arises. Which of the two distributed file storage systems should we use to store persistent data that must be available to all of the cluster’s servers? Without distributed file storage, the whole concept of Docker containers is compromised, because the cluster can only run in high-availability mode if it is backed by a distributed file storage system such as CephFS or NFS.

Any application placed on one of the cluster’s worker nodes should also have access to our data storage, and should continue to work if one of the servers in the data storage cluster drops out, is lost, or becomes unavailable.

NFS or CephFS?

It is clear that a persistent storage solution is necessary. The question is which one is the better fit: CephFS or NFS?

What is NFS?

NFS (Network File System) is one of the most commonly used data storage systems that meets our minimum requirements. It provides transparent access to files and file systems on a server, and it lets any client application that can work with a local file also work with an NFS file without modification.
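The "no program modification" point can be illustrated with a minimal sketch. The function below uses only ordinary file I/O, so it would behave the same whether the directory is local or an NFS mount. The function name `append_log` and the mount path mentioned in the comment are hypothetical, and a temporary directory stands in for the shared mount so the example runs anywhere.

```python
from pathlib import Path
import tempfile


def append_log(directory, message):
    """Append a line to app.log in `directory` using ordinary file I/O."""
    log_file = Path(directory) / "app.log"
    with log_file.open("a", encoding="utf-8") as handle:
        handle.write(message + "\n")
    # Read the file back so the caller can see everything written so far.
    return log_file.read_text(encoding="utf-8").splitlines()


# A temporary directory stands in for an NFS mount point such as
# /mnt/nfs/shared (a hypothetical path); the code itself does not change.
with tempfile.TemporaryDirectory() as shared_dir:
    append_log(shared_dir, "written by worker-1")
    lines = append_log(shared_dir, "written by worker-2")
    print(lines)
```

On a real cluster, each worker would pass the path of its own NFS mount instead of the temporary directory.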

NFS Server Scheme

From the scheme above, you can see that the NFS server contains data that is available to every server in the cluster. The scheme works well for projects involving modest data volumes and without the requirement for high-speed input/output.

What issues can you face when working with NFS?

Potential NFS Problem #1

The whole load falls on the hard drive in the NFS server, which every other server in the cluster accesses for read and write operations.

Potential NFS Problem #2

There is a single endpoint to the server holding the data. If that data server drops out, our application is likely to fail with it.

What is Ceph?

Ceph is one of the most advanced and popular distributed file and object storage systems. It is an open-source, software-defined storage system backed by Red Hat, a company specialising in DevOps and cloud development.

Key Ceph Features

- no single entry points;

- easily scalable to petabytes;

- stores and replicates data;

- responsible for load balancing;

- guarantees accessibility and system robustness;

- free (however, its developers might supply fee-based support);

- no need for special equipment (the system can be deployed at any data center).

How Ceph Works As A Data Storage Solution

1) RADOS – as an object.

2) RBD – as a block device.

3) CephFS – as a file, through a POSIX-compliant file system.

Access to the distributed storage of RADOS objects is provided through the following interfaces:

1) RADOS Gateway – a RESTful interface compatible with Swift and Amazon S3.

2) librados and the related C/C++ bindings.

3) rbd and QEMU-RBD – Linux kernel and QEMU block devices.

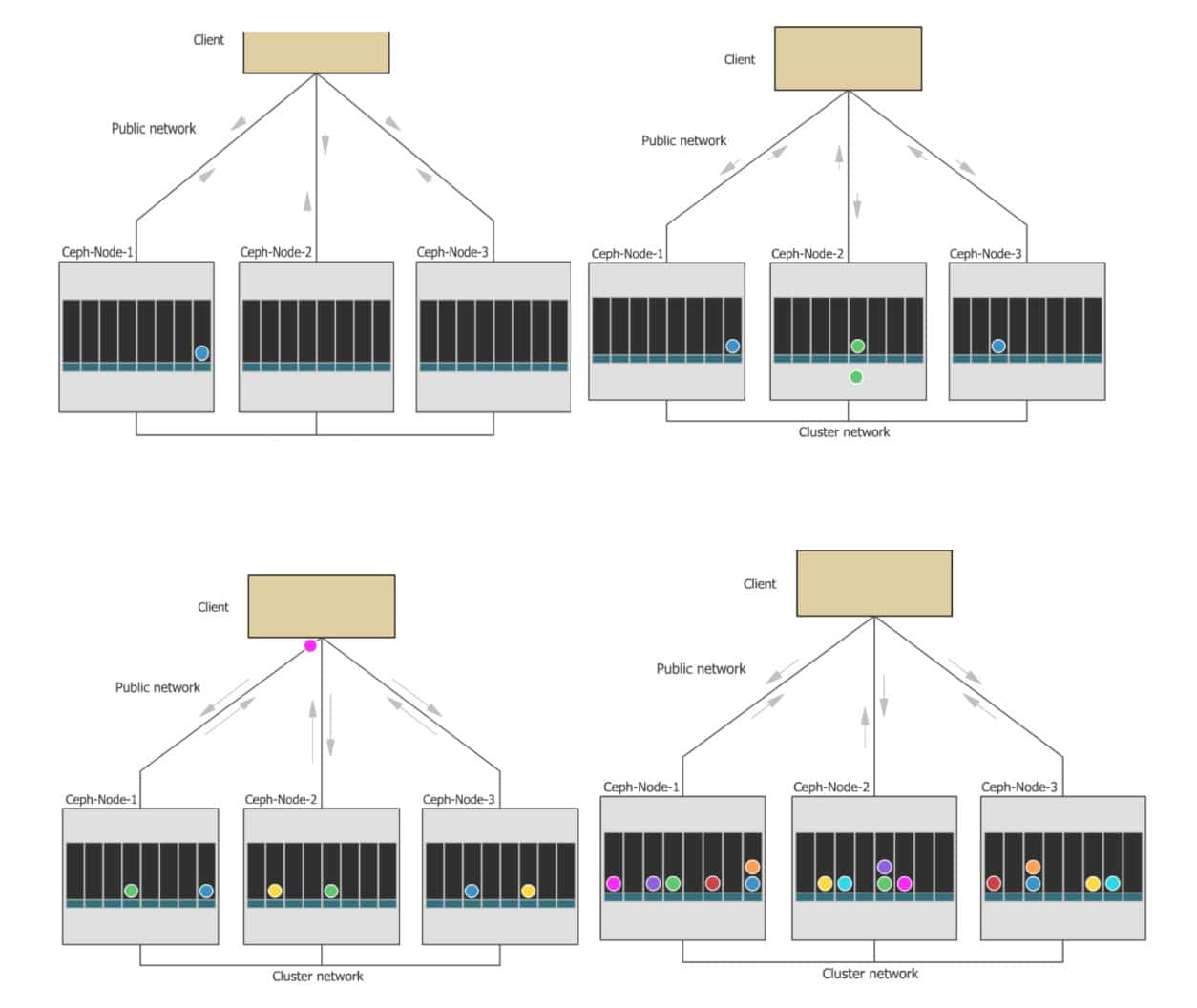

The diagram below shows how data is placed in a Ceph cluster set up with Ceph Ansible, using a replication factor of 2:

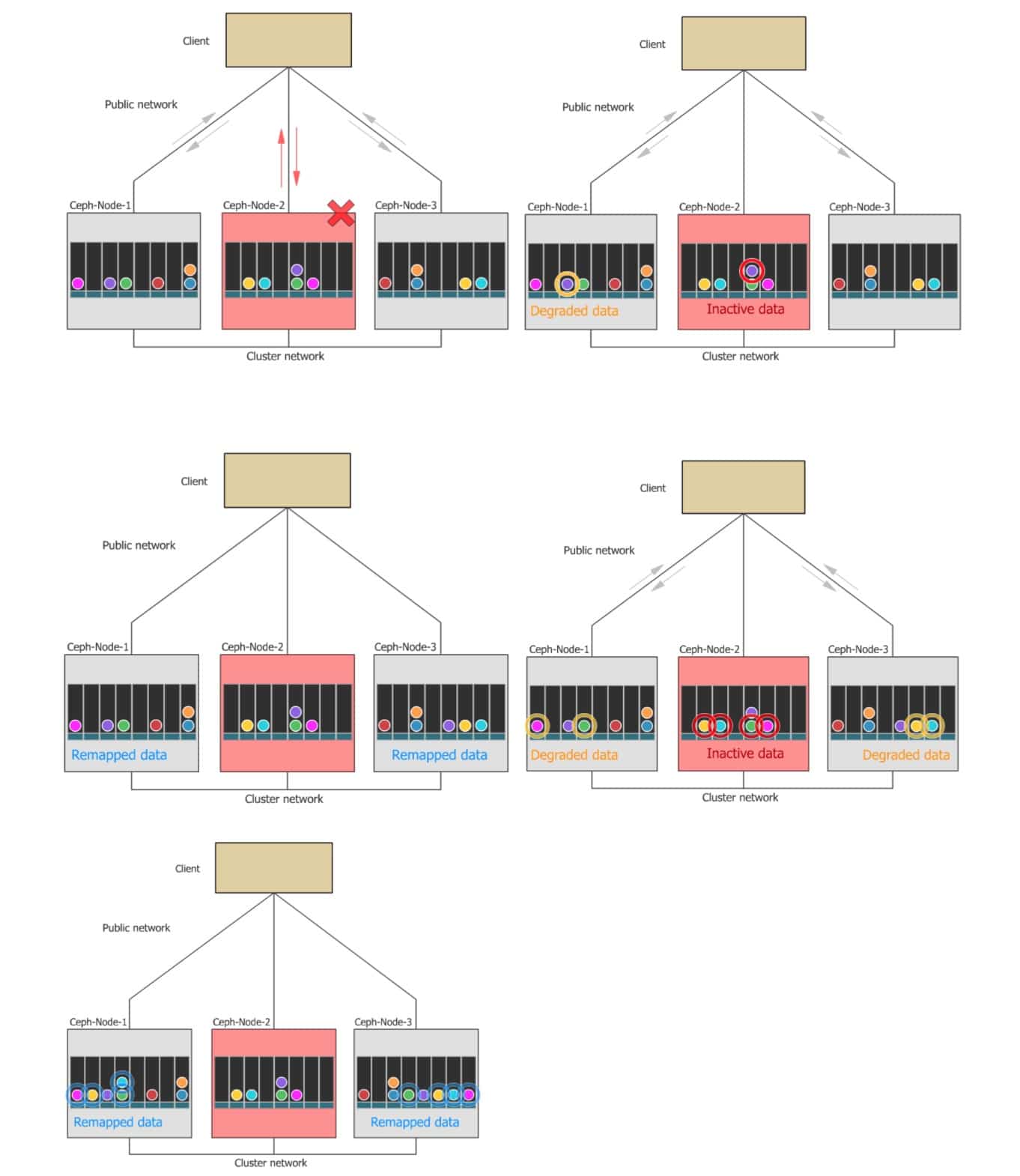

And here you can see how data is restored inside the cluster in case of the loss of a Ceph cluster node:
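Placement with two replicas, and recovery after a node is lost, can also be sketched in a few lines of code. This is a toy model and not Ceph's actual algorithm: Ceph uses CRUSH, whereas the sketch below swaps in plain rendezvous hashing to pick two nodes per object. The node and object names are hypothetical.

```python
import hashlib


def place(obj_name, nodes, replicas=2):
    """Pick `replicas` distinct nodes for an object via rendezvous hashing.

    Toy stand-in for Ceph's CRUSH algorithm, for illustration only.
    """
    def score(node):
        digest = hashlib.sha256(f"{node}:{obj_name}".encode()).hexdigest()
        return int(digest, 16)

    # The highest-scoring nodes hold the replicas of this object.
    return sorted(nodes, key=score, reverse=True)[:replicas]


nodes = ["ceph-1", "ceph-2", "ceph-3"]          # hypothetical node names
objects = [f"object-{i}" for i in range(6)]     # hypothetical object names

before = {name: place(name, nodes) for name in objects}

# Simulate the loss of one node and recompute placement on the survivors.
lost = "ceph-2"
survivors = [node for node in nodes if node != lost]
after = {name: place(name, survivors) for name in objects}

for name in objects:
    print(name, before[name], "->", after[name])
```

In this model, objects that had no replica on the lost node keep their placement, while objects that did have one keep their surviving copy and gain a new replica on another node. That is the general idea behind the rebalancing that follows a node loss.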

Ceph Use Cases

Ceph has gained a wide audience and some of the well-known companies to make use of CephFS are:

How Ceph works and infrastructure requirements

Ceph’s primary infrastructure requirement is a stable network connection between the cluster’s servers. The minimum requirement is a 1 Gb/s link between servers, but network interfaces with a bandwidth of at least 10 Gb/s are recommended.

From our experience of building Ceph clusters, it’s worth mentioning that the network infrastructure can become a bottleneck in Docker clusters. Any problem in the network infrastructure can delay the delivery of data to clients, slow down the cluster, and trigger a rebalancing of data within it. We recommend placing the Ceph cluster servers in one server rack and connecting them through additional internal network interfaces.

Our experience at K&C also includes clusters built on 1 Gb/s network links, with servers that are not connected through internal interfaces and are placed in different racks in separate data centres. Even with that less-than-ideal set-up, the cluster’s performance can usually be regarded as satisfactory, providing SLAs (service-level agreements) for data accessibility of 99.9%.

How to build a minimum requirements Docker cluster using CephFS

How would you go about building a minimum requirements Docker cluster using CephFS? In this example, we’ll use a 1 Gb/s network interface between the Ceph cluster servers. Clients are connected through the same network interface. The primary requirement in this case is to resolve the problems mentioned above, which can occur when data storage is implemented with an NFS server. For clients, the data will be provided as a file system.

In a scheme like the above, we have three physical servers with three hard drives allotted to the Ceph cluster’s data. The drives are HDDs (not SSDs) of 6 TB each, and the replication factor is x3. As a result, the total raw data volume amounts to 18 TB. Each of the Ceph cluster’s servers is, in turn, an entry point to the cluster for end clients. This allows us to “lose” one of the Ceph cluster’s servers at a time (server down, server maintenance, and so on) without harming the clients’ data or making it unavailable.
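The capacity arithmetic can be checked with a short sketch. It assumes one 6 TB drive per server (three drives in total), which is what the 18 TB figure implies, and it ignores file system overhead and the free-space headroom a real Ceph cluster needs. The function name is hypothetical.

```python
def cluster_capacity_tb(servers, drives_per_server, drive_tb, replication):
    """Return (raw, usable) capacity in TB for a replicated cluster.

    Simplified model: usable space is raw space divided by the number of
    copies kept of each object. Real clusters lose more to overhead.
    """
    raw = servers * drives_per_server * drive_tb
    usable = raw / replication
    return raw, usable


# Assumption: one 6 TB HDD per server, three servers, replication x3.
raw_tb, usable_tb = cluster_capacity_tb(
    servers=3, drives_per_server=1, drive_tb=6, replication=3
)
print(f"raw: {raw_tb} TB, usable: {usable_tb:.1f} TB")
```

Under these assumptions, 18 TB is the raw capacity. With three copies of every object, roughly 6 TB of unique data fits in the cluster.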

With this scheme, we solve the problem of NFS being a single entry point to our data storage, and we also speed up data operations.

Let’s test the throughput of our cluster by writing a 500 GB file to the Ceph cluster from a client server.

The graph shows that loading the file into the Ceph cluster takes a little over five hours. Note also the utilisation of the network interface: it is loaded at 30% (300 Mb/s), not 100% as you might have assumed. The reason is the limited write speed of the HDDs. You can achieve higher read and write speeds by building a Ceph cluster with SSDs, but the total cost of the cluster increases significantly.
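As a rough sanity check on these figures, the sketch below converts between file size, link rate and transfer time. It assumes decimal units (1 GB = 10^9 bytes, 1 Mb/s = 10^6 bits per second) and treats the upload as taking exactly five hours. The function names are hypothetical.

```python
def transfer_hours(size_gb, rate_mbps):
    """Hours needed to move `size_gb` gigabytes at `rate_mbps` megabits/s."""
    bits = size_gb * 8 * 1000**3
    seconds = bits / (rate_mbps * 1000**2)
    return seconds / 3600


def average_rate_mbps(size_gb, hours):
    """Average rate in megabits/s if `size_gb` gigabytes took `hours` hours."""
    bits = size_gb * 8 * 1000**3
    return bits / (hours * 3600) / 1000**2


hours_at_300 = transfer_hours(500, 300)    # sustained 300 Mb/s
hours_at_1000 = transfer_hours(500, 1000)  # fully saturated 1 Gb/s link
avg_rate = average_rate_mbps(500, 5)       # implied by a five-hour upload

print(f"500 GB at 300 Mb/s: {hours_at_300:.2f} h")
print(f"500 GB at 1 Gb/s:   {hours_at_1000:.2f} h")
print(f"average rate over 5 h: {avg_rate:.0f} Mb/s")
```

Under these assumptions, a sustained 300 Mb/s would finish in about 3.7 hours, so an upload of a little over five hours corresponds to an average rate somewhat below 300 Mb/s, at roughly 220 Mb/s. Either way, the link is far from saturated, which fits the observation that the disks are the limiting factor rather than the network.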

In Conclusion – CephFS or NFS as our data storage solution for a Docker cluster?

The choice between NFS and Ceph depends on a project’s requirements and scale, and should also take future needs such as scalability into account. We’ve worked on projects for which Ceph was the optimal choice, and on others where the simpler NFS was more than enough to do the job.

Broadly speaking, in the case of small clusters where data loads are modest, NFS can be a cheap, easy and perfectly suitable choice. For larger projects where heavier data loads will be processed and stored, the more sophisticated Ceph solution will most likely be recommended.