If you are developing a big data application in 2022, chances are you will be weighing a classic container-based cloud architecture against a serverless architecture. In this post, K&C's Big Data & AI consulting and development team looks at why serverless is becoming so popular for applications and platforms that work with big data.

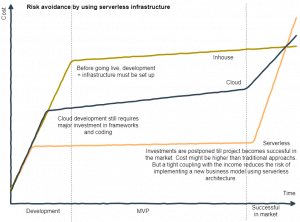

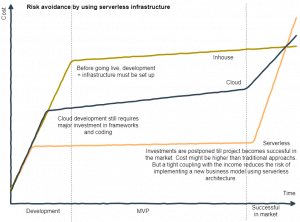

Both serverless and containers offer huge cost advantages over earlier approaches. Before the era of affordable, scalable public cloud platforms (it is easy to forget that AWS only launched in 2006 and took several years to establish itself), the insights gained from big data analytics were practically limited to large enterprises because of the infrastructure costs involved. There was also far less data overall, since the connected devices that now produce so much of it are themselves a consequence of the cloud computing revolution.

Cloud development led to the adoption of containers, which made it possible to break applications down into the smallest meaningful independent modules and to improve speed, efficiency, and reliability. For big data projects, containers running inside VMs had a further advantage over the container-less VMs used before: they allowed big data applications to run independently of the underlying hardware and GPUs, and they made GPU sharing more efficient.

Serverless goes a step further and offers containers as a service, removing the need to set up and manage the underlying VM infrastructure. The software development team then only has to interact with the container.

K&C's experts believe that, while a serverless architecture is not yet always the right choice for AI applications powered by big data, the technology is moving in that direction.

In this article, we look at why serverless is so well suited to processing big data, and why serverless architecture is so popular in application development in general.

The evolution of big data infrastructure: from Hadoop to the cloud, and from containers to serverless

In 2012, Forbes published a guest article on the rise of big data by John Bantleman, CEO of the database software company RainStor. The "age of big data" had been declared. Bantleman wrote:

"We have entered the age of big data, in which new business opportunities are discovered every day because innovative data management technologies now allow companies to analyze all types of data."

However, Bantleman also warned that the business opportunities opened up by big data come at a cost that was not yet fully apparent. Collecting, storing, and processing the vast volumes of semi-structured and unstructured data being generated, and applying artificial intelligence and machine learning algorithms to analyze them, requires enormous computing resources.

Big data architecture challenges

The infrastructure of any application built to process big data has to answer the following questions and handle the challenges they describe:

- How much data might your application need to process?

- How much computing power do you need?

- How fast is the data volume expected to grow?

- Should you expect an uneven data flow, with data streams rising or falling at different times of day and/or by season?

- Does data arrive from different sources in inconsistent formats, for example structured and unstructured, and does it contain anomalies or unneeded "noise" that has to be filtered out?
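
The volume, compute, and uneven-flow questions can be turned into a rough capacity estimate. The sketch below applies Little's law (work in flight ≈ arrival rate × processing time per item); the function name, the event rates, and the 200 ms processing time are illustrative assumptions rather than measurements from a real system.

```python
def required_concurrency(events_per_second: int, avg_duration_ms: int) -> int:
    """Estimate how many workers must run in parallel (Little's law).

    In-flight work = arrival rate x time each event takes to process.
    Integer milliseconds keep the arithmetic exact; the result is rounded up
    because a fraction of a worker cannot run.
    """
    if events_per_second < 0 or avg_duration_ms < 0:
        raise ValueError("rate and duration must be non-negative")
    return -(-events_per_second * avg_duration_ms // 1000)  # ceiling division


# Hypothetical load profile: a quiet baseline and an occasional peak,
# both assuming 200 ms of processing per event.
baseline = required_concurrency(events_per_second=50, avg_duration_ms=200)
peak = required_concurrency(events_per_second=2_000, avg_duration_ms=200)
print(f"baseline: {baseline} concurrent workers, peak: {peak}")
# → baseline: 10 concurrent workers, peak: 400
```

The gap between the baseline and the peak figure is what makes fixed, peak-sized infrastructure expensive.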

Before the rise of the cloud and serverless, which offered storage and compute resources as a utility, processing big data meant building and maintaining the corresponding server infrastructure. It had to be large enough to handle peaks in the data flow, even if they occurred only occasionally.

Yet anomalies such as peaks and troughs in the data flow often yield the most valuable scientific or commercial insights. Being able to handle occasional peaks, however, meant paying for and maintaining expensive infrastructure that sat idle most of the time.
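
The cost of sizing for peaks can be made concrete by comparing a cluster that runs at peak capacity around the clock with paying only for the capacity each hour actually uses. The hourly load profile and the unit price below are made-up illustrative numbers, and the on-demand figure assumes perfectly elastic capacity, which real platforms only approximate.

```python
def fixed_vs_on_demand(hourly_load: list[int], price_per_unit_hour: float) -> tuple[float, float, float]:
    """Compare a peak-sized fixed cluster with paying only for capacity actually used.

    hourly_load: capacity units needed in each hour (hypothetical numbers).
    Returns (fixed_cost, on_demand_cost, average_utilization_of_fixed_cluster).
    """
    peak = max(hourly_load)
    hours = len(hourly_load)
    fixed_cost = peak * hours * price_per_unit_hour          # peak capacity runs all day
    on_demand_cost = sum(hourly_load) * price_per_unit_hour  # pay only for what each hour needs
    utilization = sum(hourly_load) / (peak * hours)          # share of fixed capacity doing work
    return fixed_cost, on_demand_cost, utilization


# Illustrative day: 22 quiet hours at 10 units, 2 peak hours at 100 units.
load = [10] * 22 + [100] * 2
fixed, on_demand, util = fixed_vs_on_demand(load, price_per_unit_hour=0.5)
print(fixed, on_demand, util)
```

With this profile the fixed cluster costs 1200.0 against 210.0 on demand, because it is busy only about 17.5% of the time.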

Hadoop, and then cloud computing, changed this. By distributing big data sets across many inexpensive "commodity server" nodes that pool together into a single computing resource able to store and process huge data sets, Hadoop significantly reduced the cost of scalable bare-metal infrastructure.
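
How Hadoop spreads a data set across commodity nodes can be sketched as block splitting plus replication. The 128 MB block size and the replication factor of 3 mirror common HDFS defaults; the round-robin placement is a deliberate simplification (real HDFS placement is rack-aware), and the file size and node names are hypothetical.

```python
BLOCK_SIZE_MB = 128  # common HDFS default block size (dfs.blocksize)
REPLICATION = 3      # common HDFS default replication factor (dfs.replication)


def place_blocks(file_size_mb: int, nodes: list[str]) -> dict[int, list[str]]:
    """Split a file into fixed-size blocks and put each block on REPLICATION distinct nodes.

    Simplified round-robin placement for illustration; real HDFS placement is
    rack-aware and also considers free space and load.
    """
    if len(nodes) < REPLICATION:
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = -(-file_size_mb // BLOCK_SIZE_MB)  # ceiling division: last block may be partial
    placement: dict[int, list[str]] = {}
    for block in range(num_blocks):
        first = block % len(nodes)
        # Consecutive nodes (wrapping around) hold the copies, so no node gets two replicas.
        placement[block] = [nodes[(first + i) % len(nodes)] for i in range(REPLICATION)]
    return placement


# A hypothetical 1,000 MB file on four hypothetical commodity nodes.
layout = place_blocks(1_000, ["node-a", "node-b", "node-c", "node-d"])
for block, replicas in layout.items():
    print(block, replicas)
```

Because every block lives on three machines, losing a single node loses no data, and jobs can read different blocks from different nodes in parallel.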

Bantleman's 2012 Forbes article estimated that a Hadoop cluster and distribution setup for big data cost around $1 million, compared with $10 million to $100 million for enterprise data warehouses. Of course, $1 million is still not small change, and it represented a barrier to entry that kept most companies away from big data applications.

Next came cloud computing and containers. Cloud providers such as AWS turned computing power into a service, eliminating the need for large upfront investments in hardware infrastructure. The pay-as-you-go model and seamless scalability of public cloud platforms like AWS opened the door to experimentation and innovation, leading to a rich open-source development ecosystem.

It also allowed many young companies using big data to grow and expand that would otherwise have faced far higher barriers to entry.

With cloud computing, no upfront investment was required, and the extra computing power needed for irregular spikes in data streams only had to be paid for when those spikes occurred.

Big data-powered AI gives humanity the knowledge to change the world

The democratization of big data through cloud computing can be seen as the catalyst for a new technological revolution, one that spans both digital technology and biotechnology. In medicine, pharmaceuticals, finance, retail, agriculture, food technology, and virtually every other sector, revolutionary developments are under way because start-ups and SMEs can now build and run big data applications.

Machine learning, which detects patterns that were previously invisible, is suddenly accelerating new discoveries.

Within a few decades, the world we live in will be unrecognizable. Yes, technology has advanced rapidly over the past decades. But what cloud-powered big data and AI achieve in the coming years will be a paradigm shift.

We will almost certainly be able to cure many diseases and conditions that have so far been incurable. The human genome, and those of other life forms, will be deciphered. Autonomous vehicles will change the economy and our lifestyles more than most people can imagine today. E-commerce will become a truly personal experience. The list goes on.

Cloud computing democratized big data by cutting costs; serverless databases lower the learning barrier

But however much cloud computing has lowered the barriers to entry for big data and the AI built on it, a bottleneck remains. The cloud has cut costs enormously, and container orchestrators such as Kubernetes have helped make applications more efficient and flexible.

Setting up and maintaining the cloud infrastructure on which containerized big data applications run is still very difficult, however. The main advantages of containers are speed and less time spent maintaining the architecture, but they require specialists with very specific skills who are hard to find. These experts are expensive, whether they are hired fully trained or developed through investment in training, and they are in short supply.

The explosive spread of the IoT (Internet of Things) in virtually every conceivable sector means enormous demand for cloud architects and DevOps engineers who can build container infrastructure. Everyone is fishing in the same shallow pool, and the difficulty and cost of hiring the skills needed to manage container infrastructure have become a strategic problem.

Serverless reduces the complexity and cost of big data applications

The main advantage of serverless computing over conventional cloud-based or server-centric infrastructure is that developers no longer have to deal with buying, provisioning, and managing backend servers, which can shorten time to release and reduce ongoing maintenance. This lowers development effort, and under the right circumstances serverless can also reduce cloud costs, because billing is based solely on the resources actually used, with no overhead for maintaining idle capacity.
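
FaaS billing is typically a per-request charge plus a charge for allocated memory multiplied by execution time (GB-seconds). The sketch estimates a monthly bill under that model; the prices are placeholders rather than any provider's current rates, free tiers are ignored, and the workload figures are hypothetical.

```python
def monthly_faas_cost(invocations: int, avg_duration_ms: int, memory_mb: int,
                      price_per_million_requests: float, price_per_gb_second: float) -> float:
    """Estimate cost under a requests + GB-seconds billing model.

    Prices are placeholders supplied by the caller; free tiers are ignored.
    """
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost


# Hypothetical workload: 10 million invocations per month, 500 ms each, 1024 MB memory.
cost = monthly_faas_cost(10_000_000, 500, 1024,
                         price_per_million_requests=0.20,
                         price_per_gb_second=0.00001)
print(round(cost, 2))
```

The point of the model is the last term: when nothing runs, both terms are zero, so idle capacity costs nothing. Check the provider's pricing page before using real numbers.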

Beyond cloud costs, serverless architectures allow applications to be deployed and updated faster, because there is no need to deploy code to servers or reconfigure backends to release a working version. Because the application is a collection of functions provisioned by the serverless provider rather than a monolithic stack, developers can release code either all at once or one function at a time.

This makes it possible to update, fix, or extend a live application quickly by changing existing functions or adding new ones.

Building a serverless architecture for big data

Cloud development introduced the pay-per-use model, in which only as many instances as needed are spun up and then deleted once a job is finished, so no further costs are incurred. With conventional cloud services, however, users still have to spin up the required virtual machines manually, which means infrastructure specialists must always be available to maintain the instances.

The Functions-as-a-Service (FaaS) offerings of serverless providers such as AWS Lambda, Google Cloud Functions, and Azure Functions automate scaling by using events to trigger actions. They can also perform data processing similar to traditional Hadoop without involving the Hadoop framework.
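
Hadoop-style processing on FaaS usually takes the form of a map/reduce fan-out: each function invocation handles one chunk of input, and a final step merges the partial results. In this sketch a local thread pool stands in for parallel invocations; `map_chunk`, `reduce_counts`, and `word_count` are hypothetical names, and in a real deployment each `map_chunk` call would be a separate invocation triggered by an event such as a new object landing in storage.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def map_chunk(lines: list[str]) -> Counter:
    """Map step: what one function invocation would do with its chunk of input."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def reduce_counts(partials) -> Counter:
    """Reduce step: merge the partial word counts returned by each invocation."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


def word_count(chunks: list[list[str]], max_parallel: int = 8) -> Counter:
    # The thread pool only simulates the provider running many invocations concurrently.
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        return reduce_counts(pool.map(map_chunk, chunks))


chunks = [["big data big"], ["data serverless"], ["Serverless big"]]
print(word_count(chunks))
```

The design choice that matters is that `map_chunk` shares no state, so the platform can run as many copies as there are chunks.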

Other serverless services are combined with FaaS to build a data pipeline made up of the following elements:

- Data ingestion

- Data streaming and processing

- Data storage
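
The three pipeline stages can be wired together as independent steps that each consume the previous stage's output. This in-memory sketch uses plain Python generators and a dict in place of managed ingestion, streaming, and database services; the stage names, the `sensor_id,value` input format, and the sample readings are hypothetical.

```python
from typing import Iterable, Iterator


def ingest(raw_lines: Iterable[str]) -> Iterator[dict]:
    """Ingestion: turn raw 'sensor_id,value' lines into records."""
    for line in raw_lines:
        sensor_id, value = line.split(",")
        yield {"sensor_id": sensor_id.strip(), "value": float(value)}


def process(records: Iterable[dict], threshold: float) -> Iterator[dict]:
    """Streaming/processing: flag readings above a threshold as they pass through."""
    for record in records:
        yield {**record, "alert": record["value"] > threshold}


def store(records: Iterable[dict], table: dict) -> int:
    """Storage: keep the latest reading per sensor (a dict stands in for a database)."""
    written = 0
    for record in records:
        table[record["sensor_id"]] = record
        written += 1
    return written


table: dict = {}
n = store(process(ingest(["s1,20.5", "s2,99.0", "s1,21.0"]), threshold=50.0), table)
print(n, table)
```

Because records flow through generators one at a time, no stage needs to hold the whole data set, which is the same property the managed services provide at scale.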

All major serverless providers (AWS, Azure, and GCP) have their own services for every stage of a big data process.

Data ingestion

Some big data applications are fed data that has already been collected and/or formatted and structured. Others integrate live data generated in real time by IoT devices, or data generated by an application itself, such as logs or change data capture (CDC).

The data ingestion services offered by the major serverless providers include:

Amazon Web Services: AWS Glue, AWS IoT, AWS Data Pipeline

Microsoft Azure: Azure Data Factory, Azure IoT Hub

Google Cloud Platform: Google Cloud Dataflow
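
Whichever ingestion service is used, records arriving from different sources usually have to be brought into one shape, and malformed "noise" has to be dropped, before processing. The sketch assumes two hypothetical input formats, a JSON message from an IoT device and a plain-text application log line, and maps both to a single schema; the field names are illustrative only.

```python
import json
from typing import Optional


def normalize(raw: str) -> Optional[dict]:
    """Map a raw record to {'source', 'key', 'value'}; return None for noise.

    Assumed (hypothetical) input formats:
      IoT JSON:  {"device": "d1", "temp": 21.5}
      Log line:  "2022-05-01T12:00:00 app=checkout latency_ms=120"
    """
    raw = raw.strip()
    if raw.startswith("{"):
        try:
            msg = json.loads(raw)
            return {"source": "iot", "key": str(msg["device"]), "value": float(msg["temp"])}
        except (json.JSONDecodeError, KeyError, TypeError, ValueError):
            return None  # malformed device message
    # Treat everything after the timestamp as key=value pairs.
    fields = dict(part.split("=", 1) for part in raw.split()[1:] if "=" in part)
    if "app" in fields and "latency_ms" in fields:
        try:
            return {"source": "log", "key": fields["app"], "value": float(fields["latency_ms"])}
        except ValueError:
            return None
    return None  # unrecognized format is treated as noise


records = ['{"device": "d1", "temp": 21.5}',
           "2022-05-01T12:00:00 app=checkout latency_ms=120",
           "garbage"]
clean = [r for r in (normalize(x) for x in records) if r is not None]
print(clean)
```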

Data streams

Data streams carry streaming data into a serverless application, enabling real-time processing and analysis. The serverless architecture must include real-time storage that can scale up and down as data volumes fluctuate. There are also dedicated services for processing data in real time.

The data streaming and processing services provided by the major serverless providers include:

Amazon Web Services: Amazon Kinesis, Amazon Managed Streaming for Apache Kafka (Amazon MSK), and Amazon Kinesis Streams for real-time data processing.

Microsoft Azure: Azure Event Hubs and Azure Stream Analytics for real-time data processing.

Google Cloud Platform: Pub/Sub and Google Cloud Dataflow for real-time data processing.
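
On AWS, a Lambda function subscribed to a Kinesis stream receives a batch of records in which each payload is base64-encoded under `Records[i]["kinesis"]["data"]`. The handler below decodes such a batch and sums values per sensor; the JSON payload shape (`sensor_id`, `value`) is an assumption, and the test event is hand-built in that record shape rather than captured from a real stream.

```python
import base64
import json


def handler(event, context):
    """Lambda-style entry point for a Kinesis-triggered function.

    Assumes each record's payload is JSON like {"sensor_id": "s1", "value": 21.5}.
    """
    records = event.get("Records", [])
    totals: dict[str, float] = {}
    for record in records:
        payload = json.loads(base64.b64decode(record["kinesis"]["data"]))
        totals[payload["sensor_id"]] = totals.get(payload["sensor_id"], 0.0) + payload["value"]
    # Returning a summary is convenient for local testing; a real pipeline
    # would write the aggregate to a storage service instead.
    return {"records": len(records), "totals": totals}


def fake_kinesis_event(payloads):
    """Build a minimal local test event in the Kinesis-to-Lambda record shape."""
    return {"Records": [{"kinesis": {"data": base64.b64encode(json.dumps(p).encode()).decode()}}
                        for p in payloads]}


result = handler(fake_kinesis_event([{"sensor_id": "s1", "value": 1.5},
                                     {"sensor_id": "s1", "value": 2.0},
                                     {"sensor_id": "s2", "value": 4.0}]), None)
print(result)
```

The function only ever sees one batch, so scaling with stream throughput is left entirely to the platform.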

Data storage

At the storage layer, serverless offers fully managed backend-as-a-service (BaaS) databases that automatically scale tables to adjust capacity and maintain performance, with built-in availability and fault tolerance. Serverless databases also allow compute and storage nodes to be decoupled.

The main serverless database services of the three major providers are:

Amazon Web Services: Amazon DynamoDB, Amazon Aurora, Amazon Athena

Microsoft Azure: Azure Cosmos DB, Azure SQL Database serverless

Google Cloud Platform: Google BigQuery, Google Bigtable, Google Datastore
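
When loading processed results into a serverless database, writes are usually batched. Amazon DynamoDB's `BatchWriteItem` operation, for example, accepts at most 25 put or delete requests per call, so large result sets have to be split. The helper below only does the chunking and leaves the actual SDK call out; the item contents are hypothetical.

```python
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def chunked(items: Iterable[T], size: int = 25) -> Iterator[list[T]]:
    """Yield consecutive lists of at most `size` items (25 matches DynamoDB's BatchWriteItem limit)."""
    if size < 1:
        raise ValueError("size must be positive")
    batch: list[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly shorter batch


items = [{"sensor_id": f"s{i}", "value": i} for i in range(60)]
batches = list(chunked(items))
print([len(b) for b in batches])
```

For DynamoDB specifically, boto3's `Table.batch_writer()` context manager already buffers writes into batches and resends unprocessed items, so a hand-written helper like this matters mainly for raw API calls or other stores with similar limits.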

K&C: can we help you develop your next serverless app?

K&C (Krusche & Company), an early adopter of the serverless approach, has spent more than 20 years building a reputation as one of the most trusted IT service providers in Munich, Germany, and Europe. We run tech talent centers in Kraków, Poland; Kyiv, Ukraine; and Minsk, Belarus. We combine German management and corporate presence with cost-efficient access to nearshore IT talent abroad.

If you are considering a serverless approach for your next big data application and would like an expert opinion on whether a serverless architecture is a good fit, or a dedicated team for end-to-end development, get in touch!

K&C: creating innovative tech solutions for over 20 years.

Contact us to discuss your individual needs or your next project.